Musical mind reading has become a reality.

Recent research has shown it is possible not only to record the behaviour of your brain while you imagine music, but also to use these scans to reproduce something close to the same sounds.

According to Michael Casey, a professor in the Department of Computer Science at top US university Dartmouth College, musicians might one day be able to compose simply by thinking of a tune.

Speaking as a guest lecturer at Goldsmiths, University of London this week, Prof Casey said: "Although music is a complex collection of information, test subjects responded better to it than simple sounds such as a sine wave."

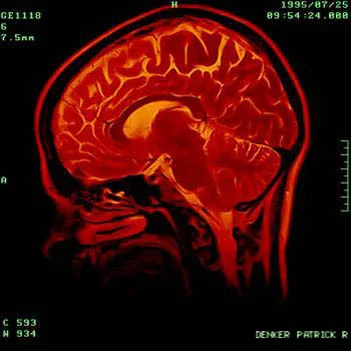

7 Tesla fMRI machine

He believed this was because the high-resolution 7-Tesla fMRI scanner used to scan the brain is incredibly noisy, making it difficult for subjects to distinguish basic tones. The problem is made worse because electrical devices are banned inside the scanner, so the sound is effectively "piped" into special headphones. When listening to music, however, the subjects naturally "fill in" some of the missing parts.

The scanner works by registering the level of oxygen in the blood in different areas of the brain, which is divided into 3D units called voxels. By testing a number of subjects on different genres of music, the researchers were able to see a pattern build up. From this they created a model, which they used to read brain scans and reproduce the sounds the subject thought about: a kind of reverse engineering.
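To give a rough feel for how a pattern across voxels can be "read" back, here is a minimal Python sketch. It is not Prof Casey's model and uses no real scan data. It is a much simpler stand-in that only guesses which kind of music a simulated scan belongs to, rather than reproducing the sound itself. The genre names, the number of voxels, the noise level and all function names are assumptions made up for illustration.

```python
import random

def pearson(a, b):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

def build_templates(scans_by_label):
    """Average the training scans for each label into one voxel template."""
    templates = {}
    for label, scans in scans_by_label.items():
        n_voxels = len(scans[0])
        templates[label] = [
            sum(scan[v] for scan in scans) / len(scans) for v in range(n_voxels)
        ]
    return templates

def decode(scan, templates):
    """Return the label whose template correlates best with the scan."""
    return max(templates, key=lambda label: pearson(scan, templates[label]))

# Everything below is simulated; no real fMRI data is involved.
rng = random.Random(0)
N_VOXELS = 40                          # assumed, far fewer than a real scan
GENRES = ["classical", "jazz", "rock"]  # hypothetical labels

# A hidden "true" activity pattern per genre, one value per voxel.
true_patterns = {g: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for g in GENRES}

def simulate_scan(genre, noise=1.0):
    """One noisy observation of the genre's hidden voxel pattern."""
    return [p + rng.gauss(0, noise) for p in true_patterns[genre]]

# "Seeing a pattern build up": average many noisy training scans per genre.
training = {g: [simulate_scan(g) for _ in range(20)] for g in GENRES}
templates = build_templates(training)

# "Reading" new scans: pick the best-matching template for each one.
test_scans = [(g, simulate_scan(g)) for g in GENRES for _ in range(10)]
accuracy = sum(decode(scan, templates) == g for g, scan in test_scans) / len(test_scans)
print(f"decoding accuracy on simulated scans: {accuracy:.2f}")
```

Averaging the training scans is what lets the pattern emerge from the noise. Reconstructing actual sound, as described in the lecture, would need a model that predicts audio features from voxels rather than just picking a label.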

The words reproduced from the scans were unintelligible on their own, but set next to the original, each was unmistakably the same in terms of pitch, length and number of syllables. So while there is clearly some way to go, these results are quite astonishing.

Michael Casey is the James Wright Professor of Music, Professor in the Department of Computer Science, and former Chair of the Department of Music (2009-2013) at Dartmouth College. He directs the Bregman Media Labs, an interdisciplinary collective of faculty and students from multiple departments.